DataChain Blog

Find DataChain news, findings, interesting reads, community takeaways, and deep dives into machine learning workflows, from data versioning and processing to model productionization.

The Neuro-Data Bottleneck: Why Neuro-AI Interfacing Breaks the Modern Data Stack

Neural data like EEG and MRI is never 'finished' - it's meant to be revisited as new ideas and methods emerge. Yet most teams are stuck in a multi-stage ETL nightmare. Here's why the modern data stack fails the brain.

Parquet Is Great for Tables, Terrible for Video - Here's Why

Parquet is great for tables, terrible for images and video. Here's why shoving heavy data into columnar formats is the wrong approach - and what we should build instead. Hint: it's not about the formats, it's about the metadata.

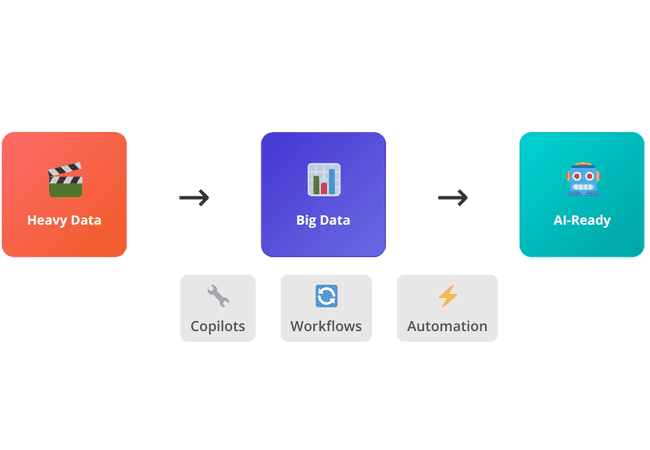

From Big Data to Heavy Data: Rethinking the AI Stack

LLMs can finally interpret unstructured video, audio, and documents, but they can't do it alone. This post introduces the concept of heavy data and explores how modern teams build multimodal pipelines to turn it into AI-ready data.

As GenAI Fever Fades - Time to Prioritize Robust Engineering Over Overblown Promises

Improved engineering and data management will be what carries GenAI to maturity.

Scalable PDF Document Processing with DataChain and Unstructured.io

Extract and parse text from documents and create vector embeddings in a scalable, distributed way, in fewer than 70 lines of code.

Post-modern AI Data Stack

How and why generative AI will change the modern data stack.

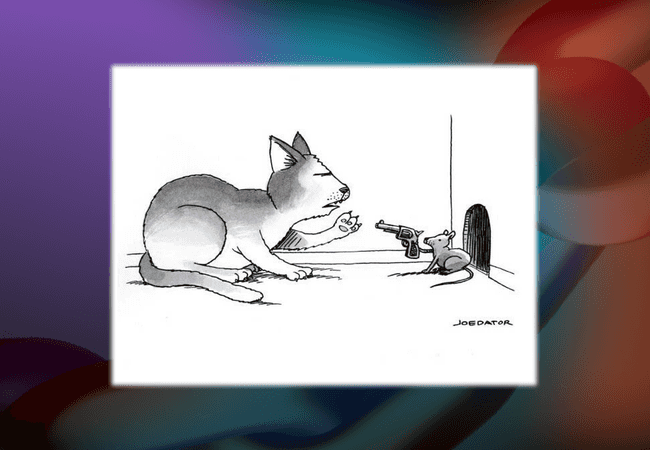

You Do the Math: Fine-Tuning Multimodal Models (CLIP) to Match Cartoon Images to Joke Captions

Learn how to fine-tune multimodal models like CLIP to match images to text captions.