From Big Data to Heavy Data: Rethinking the AI Stack


LLMs can finally interpret unstructured video, audio, and documents — but they can't do it alone. This post introduces the concept of heavy data and explores how modern teams build multimodal pipelines to turn it into AI-ready data. The key: storing structured outputs like summaries and embeddings to support agents, copilots, and future workflows — without reprocessing everything from scratch.

Introducing Heavy Data

AI is redefining what data looks like. Large language and vision models can now process videos, audio, PDFs, MRI scans, and images — extracting summaries, objects, transcripts, and meaning from large raw files that once sat untouched. This shift introduces a new category: heavy data.

Heavy data is large, messy, and multimodal. It lives in object storage, not tables. It can't be queried directly with SQL. And until recently, it has been too costly and complex to work with at scale.

The industry has mastered big data: structured logs, metrics, and transactions turned into queryable datasets using a mature ecosystem of tools. But heavy data remains largely untapped, despite holding even richer insights. Now that AI can interpret it, the question is: how do we make heavy data AI-ready?

Why AI-Ready Data Matters

Consider a robotics team building AI-powered systems. They need to analyze thousands of hours of robot video logs and answer questions like "When did the robot get stuck?" or "When did it fail to complete a task?"

They can't simply feed full-length videos into an LLM: the videos are too long and context windows are too small. Instead, they build a multimodal pipeline: split videos into 10–30 second clips or extract frames, run ML models or LLMs to describe each segment, and persist those intermediate results. Downstream prompts or systems can then query those summaries to answer high-level operational or debugging questions.
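The splitting and describing steps can be sketched as below. This is a minimal illustration, assuming the video's duration is already known and using a fixed 20-second clip length; `split_into_clips`, `describe_clips`, and the `s3://example-bucket/...` URI are hypothetical names, and the lambda stands in for whatever vision model or LLM a real pipeline would call.

```python
import math
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Clip:
    """A time window [start_s, end_s) within one source video."""
    video_uri: str
    start_s: float
    end_s: float


def split_into_clips(video_uri: str, duration_s: float, clip_len_s: float = 20.0) -> list[Clip]:
    """Split a video of known duration into fixed-length clips.

    Boundaries are computed from the clip index (not by repeated addition) so
    they do not drift; the final clip is shorter when the duration is not an
    exact multiple of clip_len_s.
    """
    if clip_len_s <= 0:
        raise ValueError("clip_len_s must be positive")
    n_clips = math.ceil(duration_s / clip_len_s)
    return [
        Clip(video_uri, i * clip_len_s, min((i + 1) * clip_len_s, duration_s))
        for i in range(n_clips)
    ]


def describe_clips(clips: list[Clip], describe: Callable[[Clip], str]) -> list[dict]:
    """Apply a model call to every clip and keep the result next to its time window."""
    return [
        {"video_uri": c.video_uri, "start_s": c.start_s, "end_s": c.end_s, "description": describe(c)}
        for c in clips
    ]


# Usage with a placeholder describer (hypothetical); a real pipeline would call a model here.
clips = split_into_clips("s3://example-bucket/robot-run-001.mp4", duration_s=65.0, clip_len_s=20.0)
rows = describe_clips(clips, lambda c: f"clip {c.start_s:.0f}-{c.end_s:.0f}s: (model output)")
```

Keeping each description next to its start and end time is what later lets a question like "when did the robot get stuck?" be answered with a timestamp rather than just a yes or no.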

This persistence is key. Running full video processing every time is expensive. By storing outputs like clip-level descriptions or tags, the team creates AI-ready data that can be reused across multiple use cases — without reprocessing raw video.

The same pattern applies to unstructured documents like PDFs or PowerPoints. Before LLMs can assist or generate insights, the content must be chunked, summarized, and indexed. Without this prep layer, the data is invisible to AI.
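The chunking step for documents can be as simple as a sliding window over words, with some overlap so a sentence cut at a boundary still appears whole in one chunk. The sketch below assumes the text has already been extracted from the PDF or slides; `chunk_words` and its default sizes are illustrative choices, and many teams chunk by tokens, headings, or pages instead.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into word windows of chunk_size, each sharing `overlap` words with the previous one."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a window reaches the end, so no trailing chunk is pure overlap.
        if start + chunk_size >= len(words):
            break
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
chunks = chunk_words(sample, chunk_size=4, overlap=1)
```

Each chunk would then be summarized and indexed (for example, embedded) so it can be retrieved later without re-reading the source file.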

The most effective teams treat this process not as ad-hoc tooling but as a foundational part of their stack, building pipelines that turn heavy data into structured, AI-ready context for data analysis and retrieval-augmented generation (RAG) applications.

AI-Ready Also Means Agent-Ready

In many modern workflows, it's not humans who directly query or process the data — it's AI agents. These agents need structured, pre-processed, and semantically enriched inputs to be effective.

AI-ready data is agent-ready data: data that can be consumed by autonomous systems, copilots, and task-chaining agents without requiring reprocessing or manual labeling. Agents need more than just access to files — they need context. They need summaries, tags, embeddings, and lineage to reason effectively, take actions, and build on prior steps.
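Concretely, an agent-ready record might bundle the summary, tags, embedding, and lineage for one segment of a source file, in a form that serializes cleanly. The field names below are a hypothetical schema for illustration, the three-element embedding is a toy vector (real embedding models output hundreds or thousands of dimensions), and the lineage keys are examples of what a team might track.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AIReadyRecord:
    source_uri: str  # raw heavy-data file in object storage
    segment: str     # where in the source this record applies, e.g. a time range or page
    summary: str
    tags: list[str] = field(default_factory=list)
    embedding: list[float] = field(default_factory=list)
    lineage: dict[str, str] = field(default_factory=dict)  # how this record was produced

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


record = AIReadyRecord(
    source_uri="s3://example-bucket/run-001.mp4",
    segment="20.0-40.0s",
    summary="Robot stops at doorway; gripper fails to close.",
    tags=["stuck", "gripper"],
    embedding=[0.12, -0.4, 0.88],
    lineage={"step": "clip_description", "model": "captioner-v1"},
)
restored = AIReadyRecord(**json.loads(record.to_json()))
```

The lineage field is what lets an agent (or a person) tell which model and step produced a record, and decide whether it can be trusted or needs to be regenerated.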

Without AI-ready data, agents remain limited: repeating work, waiting on tool calls that take too long to run, hallucinating answers, or failing to act entirely.

From Raw to AI-Ready: A New AI Stack

Traditional data pipelines assume data is already structured and ready to use. But with today's LLM-based workflows, teams start with raw content: hours of video, mountains of slides, or thousands of uncurated documents. That data has to be transformed before any meaningful interaction, whether human or agent-driven, is possible.

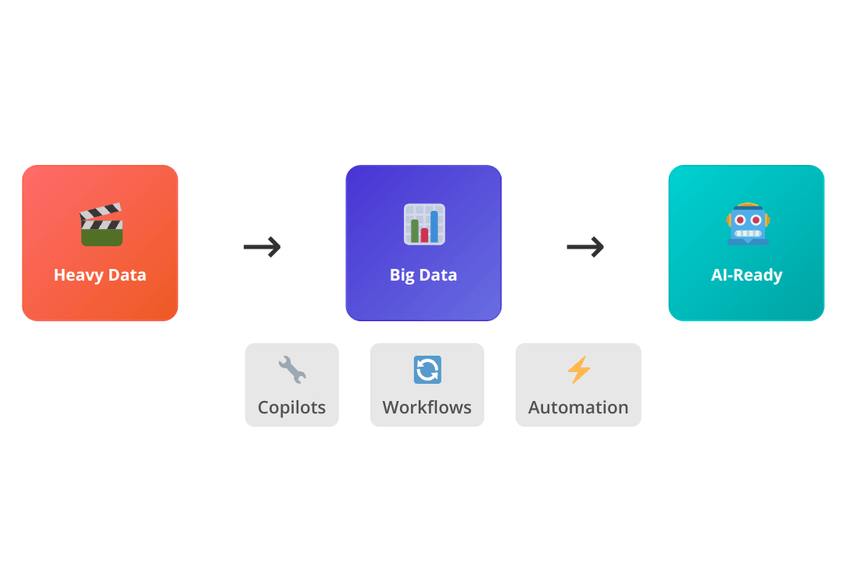

The new AI-native data flow looks like this:

Heavy Data → Big Data (Structured) → AI-Ready Data

- Heavy Data: raw, multimodal files in object storage

- Big Data: structured outputs (summaries, tags, embeddings, metadata) stored in Parquet files, Apache Iceberg tables, or databases

- AI-Ready Data: reusable, queryable, agent-accessible input for workflows, copilots, and automation
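As a small example of the last stage, an agent asking "when did the robot get stuck?" could embed the question and retrieve the closest stored clip summaries by cosine similarity. This is a minimal sketch in pure Python: the rows, their three-dimensional embeddings, and the query vector are toy values made up for illustration, standing in for vectors produced by a real embedding model and a vector index.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], rows: list[dict], k: int = 2) -> list[dict]:
    """Return the k stored rows whose embeddings are most similar to the query."""
    return sorted(rows, key=lambda r: cosine(query_vec, r["embedding"]), reverse=True)[:k]


# Toy AI-ready rows (hypothetical values): clip-level summaries with precomputed embeddings.
rows = [
    {"segment": "0-20s", "summary": "Robot picks up box.", "embedding": [1.0, 0.0, 0.0]},
    {"segment": "20-40s", "summary": "Robot stalls at doorway.", "embedding": [0.0, 1.0, 0.0]},
    {"segment": "40-60s", "summary": "Robot retries and stalls again.", "embedding": [0.1, 0.9, 0.0]},
]
# Toy query vector standing in for an embedding of "when did the robot get stuck?"
hits = top_k([0.0, 1.0, 0.0], rows, k=2)
```

Because the summaries and embeddings were computed once and stored, this query touches only small structured rows, not the raw video.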

This new flow demands more than just LLMs — it demands best practices and infrastructure that can handle scale, memory, and structure.

What Comes Next

Every enterprise has unstructured, heavy data — and now, for the first time, AI can extract meaning from it. But running models isn't enough. You need to capture that meaning, structure it, and make it reusable across workflows and systems.

The most advanced teams don't just build models. They build pipelines that turn raw files into knowledge — and systems that remember what's already known. And in many cases, it's agents — not people — that rely on that memory.

In this new AI-native stack, the real differentiator isn't the size of your model — it's how well your data is prepared for autonomous use.