Parquet Is Great for Tables, Terrible for Video - Here's Why

Stop Shoving Your Videos Into Parquet

The Hidden Cost of Heavy Data

If you’re working with multimodal data - massive video archives, endless audio recordings, point clouds, MRI scans, or sprawling image datasets - you already know the struggle:

- You spend hours - sometimes days - just to find the 12 clips where a specific car and a pedestrian appear together.

- You know you'll get the best insights by looking directly at your data - but figuring out where to even start looking feels impossible.

- You need to measure simple coverage - how many examples of a rare animal or noisy recording you actually have - but that means re-running expensive jobs on petabytes of raw data.

- Every time you adjust your labels or model training subset, you're stuck reshuffling terabytes of files before you can even test an idea.

It's like trying to search a library by flipping through every book, page by page.

We Hear This All the Time

Over the past few years, we've worked closely with multiple data and AI teams facing these same challenges. One question comes up again and again:

Why don’t we just put everything - even the images - into Parquet or Iceberg?

At first, it feels like a neat shortcut. Some teams even start down this path. But often, they give up once performance collapses, files bloat, and workflows grind to a halt.

This post is a warning drawn from those experiences: what looks simple at the start can quickly turn into a trap. Let’s unpack why.

Why Metadata Changes Everything

The natural response is to stop running heavy operations on raw data for every query. Instead, teams precompute metadata - or "features" - about their datasets:

- What objects appear in each frame of video

- The noise level in each audio segment

- Sentiment scores in text messages

These features are lightweight and structured. Suddenly, you can query, filter, and aggregate at database speed instead of waiting for compute jobs to finish.
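To make this concrete, here is a minimal sketch of what querying precomputed features can look like. The `FrameFeature` record, its fields, and the sample rows are hypothetical; in practice these rows would sit in a Parquet table or a database rather than a Python list.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FrameFeature:
    """One precomputed metadata row (hypothetical schema)."""
    video: str   # path of the source video in object storage
    frame: int   # frame number inside that video
    label: str   # object detected in the frame


# Hypothetical detections, computed once by an offline job.
FEATURES = [
    FrameFeature("clips/a.mp4", 10, "car"),
    FrameFeature("clips/a.mp4", 10, "pedestrian"),
    FrameFeature("clips/a.mp4", 42, "car"),
    FrameFeature("clips/b.mp4", 7, "pedestrian"),
    FrameFeature("clips/c.mp4", 3, "deer"),
]


def frames_with_all(features, wanted):
    """Return sorted (video, frame) pairs whose labels include every wanted label."""
    labels_by_frame = defaultdict(set)
    for f in features:
        labels_by_frame[(f.video, f.frame)].add(f.label)
    wanted = set(wanted)
    return sorted(key for key, labels in labels_by_frame.items() if wanted <= labels)


def coverage(features):
    """Count how many detections exist per label."""
    return Counter(f.label for f in features)


hits = frames_with_all(FEATURES, {"car", "pedestrian"})
print(hits)                         # frames where both objects appear together
print(coverage(FEATURES)["deer"])   # how many examples of a rare class exist
```

Neither query touches a single video byte: both run over small structured rows, which is what makes them fast.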

Why Teams Try It Anyway

On the surface, it seems appealing to put everything - structured metadata, plus raw multimodal data like video, audio, and images - in one Parquet file. Teams often do this for three reasons:

- Simplicity: A single container feels easier to manage than juggling object storage + metadata tables.

- Governance: Storing everything together avoids broken links when files move or are renamed.

- Cost / consolidation pressure: Some teams already use Parquet heavily for analytics, so extending it to raw data feels like “one system for everything.”

The catch? Those quick wins collapse the moment your data gets heavy. What looks neat at first ends up creating bloated, inefficient, and fragile systems.

The Trap: Shoving Blobs into Parquet

Here's where things go wrong. Many teams reason:

If Parquet is great for structured data, why not store the raw videos, audio, or images in it too?

Sounds clever, right? It's a trap. Parquet wasn’t built to move or analyze gigabytes of binary payloads inside each row. It bloats your files, complicates processing, and slows everything down - the exact opposite of what you wanted.

What you lose when you stick images and audio into Parquet:

- You throw away format advantages (fast decoding, seeking, rendering).

- You slow down queries - nothing can be filtered until the blob is pulled back out. And Parquet doesn't fetch a single blob: it reads a whole block of data at a time.

- You hurt tooling - image viewers and libraries expect real JPG/MP4 files, so you need an extra extraction step before you can use them.

- And when you do want to look at the data yourself (the best insights, remember?), you’ve made it harder - the file has to be unpacked first.

Even newer table formats like Apache Iceberg don't solve this problem: they help with versioning and schema evolution. Parquet itself is evolving (see "The two versions of Parquet") to handle ML/AI use cases like thousands of feature columns per file - but neither is designed to handle heavy binary data efficiently.

Benchmark: Why Parquet Breaks for Audio

And this isn't just theory - we ran the numbers. We tested 1,000 audio files in S3 with 10 threads across different formats.

| Format | Throughput (files/s) | Total time (s) | Slowdown (vs. mp3) |
|---|---|---|---|
| mp3 | 46.42 | 22.45 | 1.00x |
| flac | 18.58 | 54.52 | 2.43x |
| wav | 10.53 | 96.74 | 4.31x |
| npz | 11.92 | 84.70 | 3.77x |
| npy | 7.25 | 138.78 | 6.18x |
| parquet bulk | 2.32 | 431.43 | 19.21x |
| parquet lookup | - | hours | 100x+ |
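A measurement of this kind can be sketched roughly as follows. This is not the harness behind the table above; `measure_throughput` and the stand-in `fake_read` loader are hypothetical, and a real run would replace the loader with a function that downloads and decodes one file from S3.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def measure_throughput(read_one, keys, threads=10):
    """Read every key with a thread pool; return (files_per_second, total_seconds)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=threads) as pool:
        # list() forces all reads to finish before the clock stops.
        results = list(pool.map(read_one, keys))
    total = time.perf_counter() - start
    return len(results) / total, total


def fake_read(key):
    """Stand-in loader (assumption): sleeps instead of fetching from S3."""
    time.sleep(0.001)
    return key


keys = [f"audio/{i:04d}.mp3" for i in range(100)]
rate, total = measure_throughput(fake_read, keys, threads=10)
print(f"{rate:.1f} files/s over {total:.2f} s")
```

The slowdown column is each format's total time divided by the fastest one, for example 54.52 / 22.45 ≈ 2.43 for FLAC.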

What We Learned:

- Native formats win: MP3, FLAC, WAV are efficient and optimized.

- NPZ/NPY fail: We even tried saving waveforms/channels separately - it didn't help. Media containers already handle stereo channels efficiently.

- Parquet collapses: Bulk reads are okay but still slower than the other formats. Selective lookups take hours! Each lookup downloads a whole block of data, which is massive overhead.

- Worst of all: burying binaries means you can't just open or inspect them - the data is locked away, invisible to humans.
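The cost of selective lookups can be illustrated with back-of-the-envelope arithmetic. The sizes below are made-up assumptions, not measurements from the benchmark: if a reader has to fetch the whole block that contains a blob, the bytes transferred per lookup grow with the number of blobs packed into that block.

```python
def bytes_per_lookup(blob_bytes, blobs_per_block):
    """Bytes transferred to reach one blob when the whole enclosing block is fetched."""
    return blob_bytes * blobs_per_block


# Hypothetical numbers: 5 MiB audio files, 200 of them packed per block.
BLOB = 5 * 1024 * 1024
PER_BLOCK = 200

native = bytes_per_lookup(BLOB, 1)          # one object holds one file
packed = bytes_per_lookup(BLOB, PER_BLOCK)  # one file costs a whole block

print(f"native: {native / 2**20:.0f} MiB per lookup")
print(f"packed: {packed / 2**20:.0f} MiB per lookup")
print(f"read amplification: {packed // native}x")
```

Under these assumed sizes, every selective lookup moves 200 times more data than opening the native file directly.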

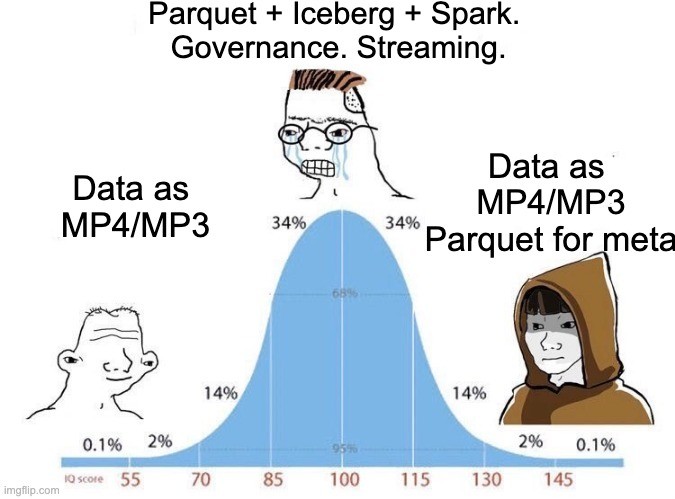

👉 Keep audio (and other media) in native formats. Use Parquet only for metadata.

Keep Heavy Data Where It Belongs

So what's the alternative? Avoid burying your media inside Parquet - there's a better way. Let each part of your dataset do what it was designed for:

- Keep binary data in object storage (like S3), in the formats designed for it.

- Keep metadata separate, in Parquet or a similar structured format.

- Bridge the two with references (paths, IDs, frame numbers, timestamps).

This gives you the best of both worlds: lightning-fast queries on structured metadata, and effortless playback or inspection from object storage.

And it connects back to what you really want: when it's time to dive in and look at the data yourself to uncover insights, you're still opening a JPG, MP4, or WAV - not untangling a massive Parquet blob or putting another tool between you and the data.
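A reference can be as small as a path plus a position. The sketch below assumes a hypothetical `ClipRef` record and bucket name; it only shows how a metadata row can point at a segment of a native file without containing any of its bytes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClipRef:
    """Metadata row pointing into a media file (hypothetical schema)."""
    bucket: str
    key: str        # object key of the native file, e.g. an MP4
    start_s: float  # segment start, in seconds
    end_s: float    # segment end, in seconds
    label: str

    @property
    def uri(self) -> str:
        """Object-storage URI of the underlying file."""
        return f"s3://{self.bucket}/{self.key}"

    @property
    def duration_s(self) -> float:
        """Length of the referenced segment, in seconds."""
        return self.end_s - self.start_s


refs = [
    ClipRef("my-bucket", "videos/drive_001.mp4", 12.0, 15.5, "pedestrian"),
    ClipRef("my-bucket", "videos/drive_002.mp4", 3.0, 4.0, "car"),
]

# Query the lightweight rows, then open only the files that matched.
for ref in refs:
    if ref.label == "pedestrian":
        print(ref.uri, ref.start_s, ref.end_s)
```

Rows like these are exactly the kind of small, typed data that fits well in Parquet, while the MP4 itself stays playable in place.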

The Hard Part: Keeping Metadata and Binaries in Sync

Linking metadata to raw files sounds easy - just store paths, IDs, frame numbers. But in practice it's fragile:

- Files get renamed or deleted → metadata breaks.

- New files appear in S3 → metadata falls out of date.

- Features are re-computed → they no longer match the right version of the data.
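These failure modes can be detected by comparing what the metadata recorded against what the bucket currently holds. The following is a minimal sketch with hypothetical names (`find_drift`, the sample dictionaries); it is not DataChain's implementation, and a real version would build `current` from a bucket listing.

```python
def find_drift(recorded, current):
    """Compare {path: etag} from metadata against {path: etag} from storage."""
    # Paths the metadata knows about but storage no longer has (renamed or deleted).
    missing = sorted(p for p in recorded if p not in current)
    # Paths in storage that have no metadata yet (newly added files).
    untracked = sorted(p for p in current if p not in recorded)
    # Paths present on both sides whose content identifier differs.
    changed = sorted(
        p for p in recorded if p in current and recorded[p] != current[p]
    )
    return {"missing": missing, "untracked": untracked, "changed": changed}


# Hypothetical snapshot stored alongside the features.
recorded = {"a.wav": "etag-1", "b.wav": "etag-2", "c.wav": "etag-3"}
# Hypothetical current listing of the bucket.
current = {"a.wav": "etag-1", "b.wav": "etag-9", "d.wav": "etag-4"}

print(find_drift(recorded, current))
```

A check like this only reports the drift; repairing it still means recomputing features for changed and untracked files.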

To avoid this, some teams attempt to pack the actual JPGs, MP4s, or MP3s into Parquet. That does keep metadata and data in one place - but at a huge cost: giant bloated files, slow queries, and the loss of the built-in efficiencies of formats like MP4, MKV, JPG, PNG, or WAV.

This is where a system like DataChain helps. It gives you the same consistency guarantees Parquet users are chasing, but without the penalty of locking binaries inside the wrong container. DataChain:

- Tracks exact versions of every file using ETags and cloud object versions, so references don’t break when files move or change.

- Auto-updates metadata when new data is added or features are recomputed.

- Keeps binaries in native formats on S3/GCP/Azure, while metadata lives in Parquet or another query-friendly store.

With DataChain, your metadata and your raw data stay perfectly aligned. You keep the speed and efficiency of native formats and the reliability of structured queries - no compromises.

👉 Parquet for metadata. Native formats for media. Consistency by DataChain.

Life After Solving It the Right Way

Once you adopt this separation-with-consistency approach, the pain disappears and iteration accelerates:

- Find the needles instantly - query metadata in seconds to locate exactly what you need.

- See the data itself - jump straight into the right clip or image, without hours of scrubbing.

- Measure coverage at scale - surface rare cases and hidden patterns without reprocessing terabytes.

- Experiment faster - build and rebalance training subsets across multiple modalities on demand.

The outcome: fast queries, reliable links, efficient storage, and the freedom to inspect raw multimodal data whenever you need it.
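As one illustration of rebalancing on demand, the sketch below caps the number of examples per label using only metadata rows. The `(path, label)` tuples, the `balanced_subset` helper, and the cap are hypothetical.

```python
import random
from collections import defaultdict


def balanced_subset(rows, per_label, seed=0):
    """Pick at most `per_label` paths for each label from (path, label) rows."""
    by_label = defaultdict(list)
    for path, label in rows:
        by_label[label].append(path)
    rng = random.Random(seed)  # fixed seed keeps the subset reproducible
    subset = {}
    for label, paths in by_label.items():
        k = min(per_label, len(paths))
        subset[label] = sorted(rng.sample(paths, k))
    return subset


rows = [(f"img/cat_{i}.jpg", "cat") for i in range(50)]
rows += [(f"img/lynx_{i}.jpg", "lynx") for i in range(3)]

subset = balanced_subset(rows, per_label=5)
print({label: len(paths) for label, paths in subset.items()})
```

Changing the cap or the seed produces a new training subset without moving or copying a single media file.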

Stop wrestling infrastructure. Start understanding your data. Spot insights faster. Build better models.